Bet ya bite a chip

I wanted to get something out earlier, but I've had a bit of nasty fever for the past few days. I promised in one of the blog comments to release the scripts that I am using for testing. I will do this very soon (probably in the next blog entry). I have some cleanup that I need to do.

fwrite->strace

In one of my past entries I mentioned that I was having trouble strace'ing startx, and that is what prevented me from using Federico's original patches. Well, that was beginning to bite me. First, my fwrite method did not handle threading very well. A message could get split a log was sent simultaneously by two different threads. (I think I could fix that though). Second, and more important, Federico's excellent visualization script wouldn't work.

I determined that figuring out how to get strace to work was really the only way to go.

First, it appears that the "SUID" problem was with strace and NOT with SELinux as I originally thought. According to the strace man-page it is possible to create a suid strace binary, and that will allow suid binaries to work properly. I created an suid-root strace, and that allowed me to "strace X". However, when I tried to launch gnome with this binary it gave me errors (some thing about "use a helper application for SUID stuff").

I had to take a different approach, so I changed my .xinitrc to launch an straced version of gnome rather than stracing startx directly. The suid-root X would start normally, and then the stracing would begin. That worked!

Here's my currently working .xinitrc:

.xinitrc

#!/bin/bash

exec strace -e clone,execve,open,access -ttt -f -o /tmp/gnome.log /home/gnome/bin/jhbuild run gnome-session

Notice that I added the "-e" option to Federico's default "-ttt -f" command line. This will ONLY record the clone, execve,open,access system calls. This speeds up the run-time, and reduces the amount of stuff that needs to be dumped in the log file.

All is not rosey

Unfortunately, now that am using strace, the start-up times seem a bit more sluggish. However, I have to update my scripts to automatically figure out how long things are taking. (I've just been reading log times manually.)

Also, and more importantly, my session will no longer shutdown by itself with strace turned on. I don't know if this is a bug in my distribution (with strace/ptrace) or an actual race within the gnome code. It makes it a real pain when I want to run 40 tests because I have to sit there and press cntrl-alt-backspace. Even then things don't get properly cleaned up. I am going to work on a big hammer to fix this.

1000 words

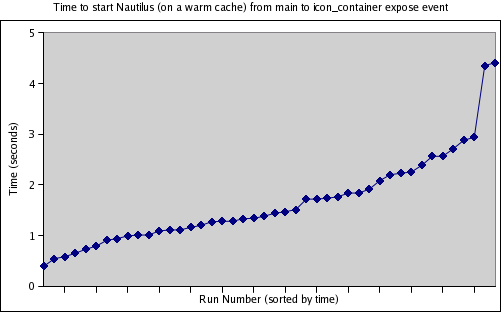

Federico suggested that I rearrange the order of nautilus vs. gnome-panel in the gnome-session file, and see if that changes the variability that I have been seeing. I don't have a definitive answer on that yet. However, I did notice that nautilus's execution does become interlaced with the gnome-vfs-daemon, and the gnome-panel. I wonder if it is possible to start the gnome-vfs-daemon before both of them, so nautilus won't have to wait for it to startup and/or compete with the panel for its resources. It would be really nice to know all of the dependencies of the gnome daemons. That way we could start the most needed ones earlier, and the non-conflicting ones in a parallel.

In Any event, here's a picture of my current startup. Note: most of the functions with a name of "(null)" are probably the gnome-vfs-daemon.

NOTE: The long pause at the end is because the shutdown of gnome has hung...

Note to ESD: DON'T EVER RUN

I noticed this before when I was shutting things down, but I saw it again on my logs, and it was really annoying me. I have esd completely disabled, yet on shutdown the esd process always starts.

I traced it down to "gnome-session-save". However, I don't yet know which part is calling it.

gnome-session-save calls this:

execve("/bin/sh", ["/bin/sh", "-c", "/home/gnome/gnome2/bin/esd -term"...], [/* 35 vars */]) = 0

which in turn calls this:

execve("/home/gnome/gnome2/bin/esd", ["/home/gnome/gnome2/bin/esd", "-terminate", "-nobeeps", "-as", "2", "-spawnfd", "15"], [/* 35 vars */]) = 0

This is the pebble in my shoe.

Cookbook

As I mentioned above, i don't yet have a cookbook that other people can follow in my footsteps and reproduce things. I will do that for the next blog. I need to do a little bit of clean-up, but then I can provide a patch.

fwrite->strace

In one of my past entries I mentioned that I was having trouble strace'ing startx, and that is what prevented me from using Federico's original patches. Well, that was beginning to bite me. First, my fwrite method did not handle threading very well. A message could get split a log was sent simultaneously by two different threads. (I think I could fix that though). Second, and more important, Federico's excellent visualization script wouldn't work.

I determined that figuring out how to get strace to work was really the only way to go.

First, it appears that the "SUID" problem was with strace and NOT with SELinux as I originally thought. According to the strace man-page it is possible to create a suid strace binary, and that will allow suid binaries to work properly. I created an suid-root strace, and that allowed me to "strace X". However, when I tried to launch gnome with this binary it gave me errors (some thing about "use a helper application for SUID stuff").

I had to take a different approach, so I changed my .xinitrc to launch an straced version of gnome rather than stracing startx directly. The suid-root X would start normally, and then the stracing would begin. That worked!

Here's my currently working .xinitrc:

.xinitrc

#!/bin/bash

exec strace -e clone,execve,open,access -ttt -f -o /tmp/gnome.log /home/gnome/bin/jhbuild run gnome-session

Notice that I added the "-e" option to Federico's default "-ttt -f" command line. This will ONLY record the clone, execve,open,access system calls. This speeds up the run-time, and reduces the amount of stuff that needs to be dumped in the log file.

All is not rosey

Unfortunately, now that am using strace, the start-up times seem a bit more sluggish. However, I have to update my scripts to automatically figure out how long things are taking. (I've just been reading log times manually.)

Also, and more importantly, my session will no longer shutdown by itself with strace turned on. I don't know if this is a bug in my distribution (with strace/ptrace) or an actual race within the gnome code. It makes it a real pain when I want to run 40 tests because I have to sit there and press cntrl-alt-backspace. Even then things don't get properly cleaned up. I am going to work on a big hammer to fix this.

1000 words

Federico suggested that I rearrange the order of nautilus vs. gnome-panel in the gnome-session file, and see if that changes the variability that I have been seeing. I don't have a definitive answer on that yet. However, I did notice that nautilus's execution does become interlaced with the gnome-vfs-daemon, and the gnome-panel. I wonder if it is possible to start the gnome-vfs-daemon before both of them, so nautilus won't have to wait for it to startup and/or compete with the panel for its resources. It would be really nice to know all of the dependencies of the gnome daemons. That way we could start the most needed ones earlier, and the non-conflicting ones in a parallel.

In Any event, here's a picture of my current startup. Note: most of the functions with a name of "(null)" are probably the gnome-vfs-daemon.

NOTE: The long pause at the end is because the shutdown of gnome has hung...

Note to ESD: DON'T EVER RUN

I noticed this before when I was shutting things down, but I saw it again on my logs, and it was really annoying me. I have esd completely disabled, yet on shutdown the esd process always starts.

I traced it down to "gnome-session-save". However, I don't yet know which part is calling it.

gnome-session-save calls this:

execve("/bin/sh", ["/bin/sh", "-c", "/home/gnome/gnome2/bin/esd -term"...], [/* 35 vars */]) = 0

which in turn calls this:

execve("/home/gnome/gnome2/bin/esd", ["/home/gnome/gnome2/bin/esd", "-terminate", "-nobeeps", "-as", "2", "-spawnfd", "15"], [/* 35 vars */]) = 0

This is the pebble in my shoe.

Cookbook

As I mentioned above, i don't yet have a cookbook that other people can follow in my footsteps and reproduce things. I will do that for the next blog. I need to do a little bit of clean-up, but then I can provide a patch.