Saturday, May 12, 2012

Monday, August 21, 2006

Thanks for All the Fish

Well, today SOC draws to a close, so I decided to write up how things currently stand. Thanks to all the Gnomers for letting me work on this!

Abstract

The point of this project was to investigate why nautilus's startup performance was slow, and to find different ways to fix the problem.

Nautilus's startup is slow because it keeps blocking and allows other applications to run.

Sometimes it is lucky and completes its startup quickly. Other times it isn't, and gnome-panel (and all of the plugins) or other startup applications get in the way.

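Problem definition

- Minimize the amount of time between nautilus startup and mapping of the icons.

- Remove the variability in the gnome startup times.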

Tools

The general problem

When I started this project, I had very little idea about the overall architecture of gnome. When do various components start up? Which pieces were responsible for which parts of the system? There is documentation for some of the individual components (Nautilus's documentation is pretty good), but nothing that describes the overall startup of the gnome desktop. There are quite a few articles describing the exact startup sequences of Linux from the boot loader to the init scripts. It would be helpful if there was something similar for gnome, so it was at least clear how things were intended to work.

The problem of understanding and analyzing the complex startup of gnome is actually a more general problem. Gnome starts multiple threads, and multiple processes, each with interlocking dependencies. A few general tools have been developed for Linux which help to understand these startup sequences. No single tool seems to have all of the features needed to truly understand what is happening, so I tried a bunch of different tools.

1) Bootchart (http://www.bootchart.org/)

The first tool that I used to determine how exactly things were starting up was the "bootchart" tool. This tool was originally designed to track the startup of programs in the Linux boot process. Fortunately it is also flexible enough to monitor and track any other set of processes that are started at a later date. It gives a nice graphical chart of when various processes started, and how much each used the cpu during the specified time period.

The initial bootchart images were the first indication that something was unusual about nautilus startup. Different charts of the same gnome startup sequence gave radically different views of when things started up and how long they took to start. Sometimes the panel would begin first, and sometimes the gnome-settings-daemon would. This was my first clue that some sort of race was happening. If there wasn't a race, each startup should have an identical series of steps, with little to no variability.

2) strace + 'access' hacks and Federico's visualization tool.

Next I started to use the analysis tools and techniques that others had used before me. In particular, I used strace to generate the traces, and Federico's tool to analyze the results. This provided the most complete data. I've detailed in an earlier blog entry how to get it all started up.

One thing I haven't mentioned yet is that I wrote a small python script to sort through the strace results. It replaces the pids with the actual names of the processes, which makes reading the strace results much easier. (It will trace clones, forks and execs, so you can see exactly what is running when...)

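    #!/usr/bin/env python3
    # Replace the leading pid on each strace line with the name of the
    # process (or cloned thread) that owns it.
    import fileinput

    pid_to_name = {}  # pid (as a string) -> program name, or "name.N" for clones
    thread = 1        # running counter used to label cloned children

    for line in fileinput.input():
        fields = line.split()
        if not fields:
            continue
        pid = fields[0]

        # Print the line, swapping the pid for a name once one is known.
        if pid in pid_to_name:
            start = line.find(" ")
            print(pid_to_name[pid] + line[start:].rstrip("\n"))
        else:
            print(line.rstrip("\n"))

        # execve("/path/to/program", ...): remember the program for this pid.
        if "execve(" in line and '"' in line:
            pid_to_name[pid] = line.split('"')[1]

        # clone(...) = <child pid>, or its "<... clone resumed>" continuation:
        # name the child after its parent plus a counter. Lines that do not
        # end in a numeric pid (unfinished or failed calls) are skipped.
        if "clone(" in line or "<... clone resumed>" in line:
            new_pid = line.split("=")[-1].strip()
            if new_pid.isdigit():
                pid_to_name[new_pid] = "%s.%d" % (pid_to_name.get(pid, pid), thread)
                thread += 1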

3) NPTL Trace Tool (http://nptltracetool.sourceforge.net/usm_example.php)

Next, I wanted to try to visualize how the different threads interacted, with the hope that I would be able to see some clear patterns as to why nautilus was continually yielding the CPU.

The NPTL trace tool looked like a promising avenue, because it had a graphical interface (using ntt_paje), and it was able to analyze the complex interaction of threaded applications. It can provide a visualization, as well as statistics about the number of locks held, and report on the various pthread calls that were made.

The trace tool wrote its results in a format the Paje visualization tool could open and analyze. Paje itself is a little clunky and requires that the GNUstep environment is installed. I was able to capture a trace of a generic application, but unfortunately when I tried to run the trace tool on nautilus, it segfaulted.

4) frysk (http://sourceware.org/frysk/)

This was another tool that promised to make visualization and debugging of complicated threaded applications/environments easy to do. It, unfortunately, is still immature. When I tried to monitor the system calls that nautilus made, it also crashed. However, this project is one to watch. It provides a graphical interface, and allows you to add arbitrary rules to analyze how a process and its set of threads are executing.

5) Ideal tool

The ideal tool would be one that shows both when applications are running, and why they vacate the CPU when they are finished. Strace is really a poor man's version of this tool. However, it doesn't have any way to indicate when the scheduler decided to swap the running process, so if an application spends all of its time in user space, the strace method will never detect that the running application has changed.
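As a rough illustration of the "why did it leave the CPU" half of that wish list: on Linux kernels that expose per-process context-switch counters in /proc/<pid>/status (an assumption about the running kernel; these counters were not part of the experiments described here), the `voluntary_ctxt_switches` and `nonvoluntary_ctxt_switches` fields separate "the process blocked or yielded" from "the scheduler preempted it". The helper names below are made up for this sketch.

```python
def parse_ctxt_switches(status_text):
    """Return (voluntary, involuntary) context-switch counts parsed from
    the text of /proc/<pid>/status. A missing field is returned as None."""
    counts = {"voluntary_ctxt_switches": None,
              "nonvoluntary_ctxt_switches": None}
    for line in status_text.splitlines():
        key, _, value = line.partition(":")
        if key in counts:
            counts[key] = int(value.strip())
    return (counts["voluntary_ctxt_switches"],
            counts["nonvoluntary_ctxt_switches"])


def read_ctxt_switches(pid):
    """Read the counters for a live process (Linux only)."""
    with open("/proc/%d/status" % pid) as f:
        return parse_ctxt_switches(f.read())
```

Sampling `read_ctxt_switches(pid)` before and after a startup run would give a crude count of how often the process gave up the CPU on its own versus being preempted, though not what ran in its place.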

6) Dishonorable mention

Two tools that I tried to use, without success, were AMD's CodeAnalyst and Intel's VTune.

AMD gets props for releasing CodeAnalyst under the GPL. It provides a GUI interface to oprofile, and some other niceties. Unfortunately, the number of distributions that it supports is rather small, and it wouldn't build on my Fedora 5 box. Even worse (in my opinion) was Intel's VTune. They provided a binary which could be used on Linux for non-commercial profiling. However, once it detected that my processor was an AMD chip, it wouldn't let me use it.

Experiments: Summary

Since it may have been difficult to follow what happened over the course of the summer, I'm going to summarize my experiments, and show how I came to the conclusions that I did.

Setup

First, my goal was to work with "warm-booting" nautilus, after everything had been loaded into the disk cache. Second, I automated the startup and collection, so that I could run the same test repeatedly, and then analyze the results by either looking at visualizations, or running the tests through gnumeric.
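For the "run repeatedly, then analyze" step, the same kind of summary that a spreadsheet gives can be sketched in a few lines of Python. This is only an illustration: the `summarize` helper is hypothetical, and it assumes the per-run startup times have already been collected as a list of seconds.

```python
import statistics


def summarize(times):
    """Summarize startup times (in seconds) collected from repeated runs."""
    return {
        "runs": len(times),
        "best": min(times),
        "worst": max(times),
        "mean": statistics.mean(times),
        # The sample standard deviation needs at least two runs.
        "stdev": statistics.stdev(times) if len(times) > 1 else 0.0,
    }
```

A large gap between "best" and "worst", or a standard deviation that is a sizeable fraction of the mean, is the kind of variability the tests below try to remove.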

Goals

My goals were to remove the variability in the nautilus startup, and minimize the overall nautilus startup time.

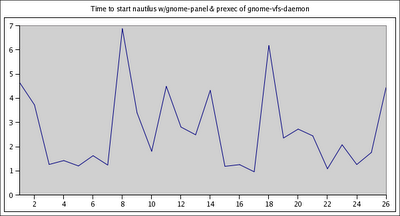

Test 1 Full startup

I added tracing information which started at the first line of "main" in nautilus, and stopped when the icons were painted on the screen (in the icon_container expose_event).

Result:

The startup times were highly variable, and other processes (such as those launched by gnome-panel) would run in between the startup of nautilus and the drawing of the icons.
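The start-to-paint measurement can be sketched as a small log parser. This assumes the trace was written with `strace -f -ttt -o FILE`, so each line looks like "pid timestamp syscall(...)", and that the two trace points show up as recognizable strings in the log (for example through the access() hack). The marker strings and function names are placeholders, not the ones actually used.

```python
def marker_times(lines, start_marker, end_marker):
    """Return (start, end) timestamps: the first line containing
    start_marker, and the first later line containing end_marker.
    Either value is None if its marker was not found."""
    start = end = None
    for line in lines:
        fields = line.split(None, 2)  # pid, timestamp, rest of the line
        if len(fields) < 3:
            continue
        try:
            stamp = float(fields[1])
        except ValueError:
            continue  # not a "pid timestamp ..." line
        if start is None:
            if start_marker in fields[2]:
                start = stamp
        elif end_marker in fields[2]:
            end = stamp
            break
    return start, end


def elapsed(lines, start_marker, end_marker):
    """Seconds between the two markers, or None if either is missing."""
    start, end = marker_times(lines, start_marker, end_marker)
    if start is None or end is None:
        return None
    return end - start
```

Calling `elapsed(open("trace.log"), "MARK: main", "MARK: expose_event")` on each run's log (file name and marker text hypothetical) would produce one startup time per run.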

Test 2 Full startup w/a high gnome-session priority

I edited the ".gnome/gnome-session" file, and set the priority of nautilus to 10, which was higher than that of gnome-panel.

Result:

No change. Nautilus was STILL interrupted by gnome-panel processes.

Test 3 Launch simply (no wm, no gnome-panel)

Next, I removed the "wm" and "gnome-panel" from the session file, and retimed nautilus's startup.

Result:

The nautilus startup times were still highly variable. Nautilus was being interrupted by the gnome-volume-manager, and the various daemons that were launched internally by gnome-session. (gnome-keyring)

One interesting part of this investigation was that sometimes "gnome_vfs_get_volume_monitor()" took a long time to return, and other times it was very quick. Federico had seen this, and he explained what was happening. Various gnome daemons/services are launched on demand, and this call was triggering one of those launches.

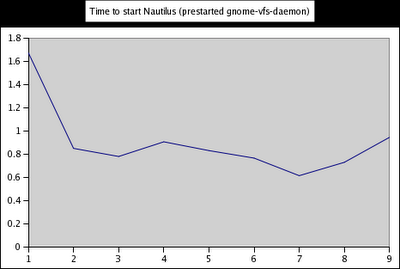

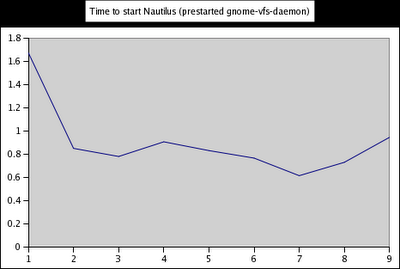

Test 3 Launch simply (no wm, no gnome-panel) w/a gnome-vfs preloader

I wanted to see if nautilus startup times would improve a little if the gnome-vfs-daemon was prestarted before nautilus started to launch. I modified the disk mount applet to call the gnome-volume-manager, and exit. Then, I modified the gnome-session-manager to call this "gnome-vfs" preloader, and wait for 2 seconds while it started. The 2 second delay is unacceptable as a "real" solution, but it was enough to see whether prestarting the daemon would improve startup time.

Result:

The variability totally disappeared, and the startup times dropped to about 1/2 second.
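The fixed 2 second wait in this test is the part that could not ship. One common alternative, sketched here as an assumption rather than something that was tried, is to poll a readiness check with a timeout. The `wait_until` helper and the `is_ready` callback are hypothetical; deciding what "the daemon is ready" actually means is left to the caller.

```python
import time


def wait_until(is_ready, timeout=2.0, interval=0.05):
    """Poll is_ready() until it returns True or `timeout` seconds pass.

    Returns True as soon as the check succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while True:
        if is_ready():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

With a check that succeeds quickly, the launcher waits only as long as needed, and the old 2 second figure becomes an upper bound instead of a fixed cost.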

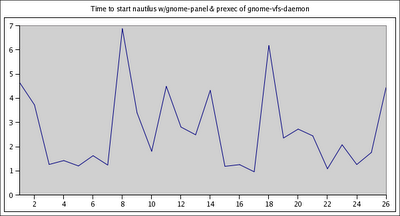

Test 4 Launch complete session w/preloader.

I decided to go for broke. I had the preloader within gnome-session, so I decided to try with gnome-panel and the gnome-wm turned back on.

Result:

Unfortunately, the variability was back. Upon closer investigation, the other applications were still interrupting nautilus between the time it was initially launched and the time the first icons were painted on the screen.

Nautilus was yielding the cpu, and other applications (gnome-panel) were running before the nautilus startup could complete.

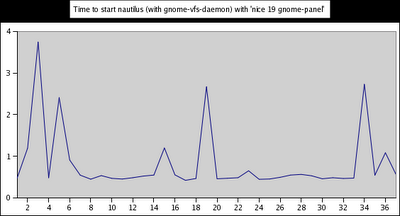

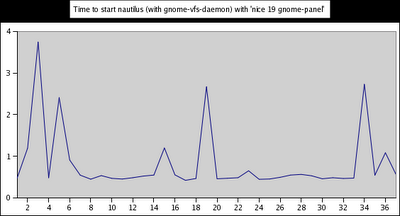

Test 5 Launch complete session w/preloader & niced gnome-panel.

Now, I wanted to see what happened if I made nautilus the highest priority process. However, since I was not able to easily boost its priority (without becoming root), I decided to drop the priority of the processes competing for the CPU. I ran the gnome-panel with a nice of 19.

Result:

Nautilus's startup was very snappy, but the gnome-panel never seemed to be painted. Some variability remained because other applications (gnome-keyring) could still occasionally interrupt the startup.
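Lowering a competitor's priority needs no special privileges, which is why this test was possible as a normal user. Making a process less nice than it already is normally requires root. A minimal sketch of launching a command at a nice value of 19 from Python follows; the `launch_niced` helper is hypothetical, and the test itself may simply have used the nice command.

```python
import os
import subprocess


def launch_niced(argv, increment=19, **popen_kwargs):
    """Start argv with its niceness raised by `increment` (lower priority).

    os.nice() runs in the child just before exec, so only the launched
    program is affected, not the caller."""
    return subprocess.Popen(
        argv,
        preexec_fn=lambda: os.nice(increment),
        **popen_kwargs
    )
```

For example, `launch_niced(["gnome-panel"])` would start the panel at the lowest normal scheduling priority on Linux, where niceness is capped at 19.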

Next Steps

To really solve the startup problem, nautilus probably has to be rearchitected to stop blocking, or it must somehow be made a higher priority than the other applications that start up.

Here are some ideas on how to fix things:

0) Incorporate gnome-vfs startup in gnome-session

This should probably be done even if it doesn't completely solve the nautilus startup problem. gnome-vfs is needed by the gnome desktop. Launching it "on-demand" only causes blocking and unnecessary contention. (I haven't tried this yet.)

1) Rearchitect Nautilus to remove all of the yielding.

This is a bigger change than I could do. Even minimizing the number of times that it yields would reduce the chance that it would be interrupted.

2) Remove the on-demand loading (or at least short-circuit it, so it is never used in a properly running desktop).

IMHO, the on-demand loading is a bad idea. Ideally all of the dependencies for an application would be running before it starts. It would reduce the number of times that applications block, and could possibly reduce thrashing as multiple applications wait for a component to start.

3) Modify nautilus to give gnome-session a thumbs up ONLY after the icons have painted.

Right now, gnome-session and the applications that it launches have a handshake that waits for an application to start. This handshake would have to be modified so that nautilus only responds after the icons have been painted.

I've been working on getting this to work, but I can't figure out where the handshake takes place.

4) Boost nautilus's priority

If we can boost nautilus's priority, it may (possibly) not be interrupted. I couldn't figure out how to do this without root privileges.

Summary

In any event, this has been a lot of fun. I learned more about nautilus & gnome than I thought possible.

Thanks to all!

Tuesday, August 01, 2006

Ah ha!

A whole bunch has happened since I blogged last....

For the most part, I figured out why nautilus startup time is so slow AND so variable.

Nautilus basically yields the CPU again and again as it starts up. Even if nautilus is one of the first components launched, other components (i.e., the panel, applets and more) will interrupt it, and prevent it from drawing the first icon on the screen. Sometimes nautilus has the CPU for most of the time, and the startup times are very good. However, sometimes it will yield the CPU, and it will be seconds before it starts running again.

First Blind Alley

As you can see in some of my last posts, I knew that nautilus was yielding the CPU. However, I thought it was doing this because it was waiting for threads/components that weren't yet running (in particular the gnome-vfs-daemon).

I initially thought that nautilus also relied on gnome-volume-manager, since gnome-volume-manager's execution was often interleaved with nautilus's startup. However, I found one run where this didn't happen, and nautilus started up just fine. Therefore it can't be a dependency. (I also discovered gnome-volume-manager was being launched by one of the gnome startup scripts.)

I modified the trash applet to preload the vfs-daemon before nautilus started up. I modified gnome-session to call this hacked applet, wait 2 seconds, and then finally start nautilus. This guaranteed that gnome-vfs-daemon was started before nautilus started. This worked, sort-of, as nautilus start-up times were much more reproducible (as long as the panel wasn't running). It is an imperfect solution, because that sleep is really unacceptable, but it could tell me if dependencies were the problem.

The interesting thing to note is that nautilus blocked several times from when executing main to the icon-painting. Nautilus did some handshaking with various threads, and this caused things to block.

Nice-i-ness

With this fix in place, I went for broke and turned on the panel. The reproducible startup times that I had, were then completely shot to hell. All of the blocking of the main nautilus process provided opportunities for the panel & applet to run. Once they started, nautilus might not execute again for a couple of seconds.

So, on a whim, I decided to run the gnome-panel with a nice value of 19. This worked pretty well. Most of the nautilus startup times were on the order of 0.5 seconds. However, every so often, the times would jump. This was because other non-panel processes (such as gnome-volume-manager) started running when nautilus blocked.

What is Next?

I'm currently trying to figure out if I can reduce the amount of blocking that nautilus is doing.

First, I have to figure out exactly what it is waiting on.

Tuesday, July 18, 2006

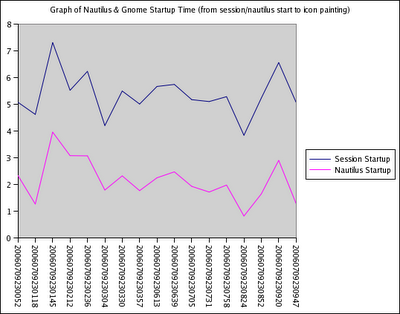

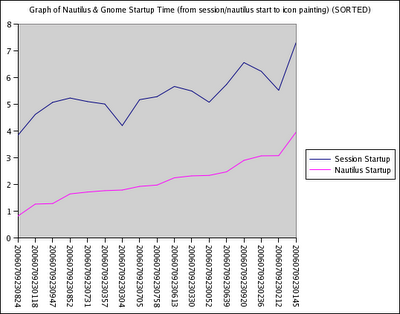

Startup Variability

I've figured out why the nautilus startup times are so variable.

I removed both the window manager AND gnome-panel from the startup sequence to do my testing. I figure if I can remove the variability when only launching nautilus, then I should be able to add back in the others and make sure that things don't break.

WHAT?

Even with gnome-session ONLY launching nautilus in the gnome-session file, nautilus still has highly variable startup times.

gnome-session starts a whole bunch of things internally, and then launches nautilus.

NOTE: I am currently running with ALL of the stracing turned on, so times may be slower than previous blog posts.

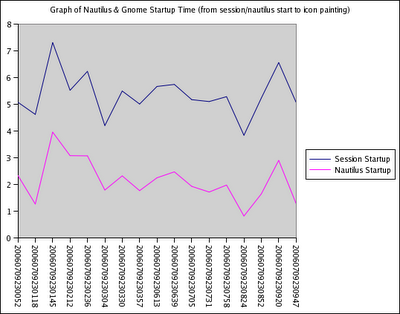

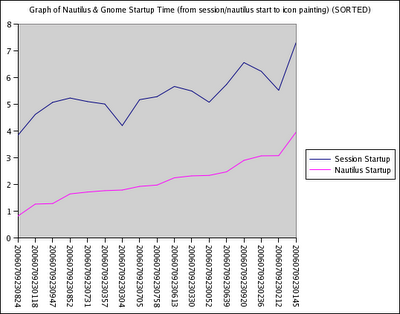

Example:

Here's the same thing sorted:

Look at how different the best and worst times are....

WHY?

gnome-session launches a whole bunch of things asynchronously. Nautilus starts a whole bunch of things asynchronously. These are all racing to finish, and some depend on each other.

Fastest run:

Slowest run:

Notice how a WHOLE bunch of processes start up between when nautilus first loads and when the first icon is painted on the screen (icon_container expose_event).

If we could prevent nautilus from blocking, or at least let it show something on the screen before all of these other threads start, things would be much more consistent.

NOTE: This totally sucks. Blogger is shrinking my pictures. My Flickr account won't let me show the whole version unless I go "pro". I'll probably do that anyway.

Until I figure out what to do, simply look for the "red" in the picture. It indicates where new daemons were launched (exec'd). When they happen early in the run, it means that the nautilus startup is interrupted. When they happen late, it means that nautilus completed before they start.
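The same "look for the red" check can be approximated straight from the strace log, without the picture. The sketch below lists every execve that shows up before the icon-painting marker. It assumes "pid timestamp syscall(...)" lines as written by `strace -f -ttt -o FILE`, and the marker text and the `execs_before` name are placeholders.

```python
def execs_before(lines, end_marker):
    """Return [(timestamp, pid, program)] for each execve line that appears
    before the first line containing end_marker."""
    found = []
    for line in lines:
        fields = line.split(None, 2)  # pid, timestamp, rest of the line
        if len(fields) < 3:
            continue
        pid, stamp, rest = fields
        if end_marker in rest:
            break  # icons are painted; later execs did not delay startup
        if rest.startswith("execve(") and '"' in rest:
            try:
                when = float(stamp)
            except ValueError:
                continue
            # The first quoted string is the path passed to execve().
            found.append((when, pid, rest.split('"')[1]))
    return found
```

A long list for a slow run and a short list for a fast run would match what the charts show: daemons exec'd early in the run get in the way of the nautilus startup.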

What to ask?

The questions that I really want to answer are:

- Why does nautilus block during startup? How can we stop it from blocking?

  (It looks as if it is waiting for other pieces of gnome to start up.)

- What daemons have to be pre-started for nautilus to begin instantly? (Another way of looking at this: what dependencies does nautilus have on other pieces of gnome?)

  It looks like gnome-volume-manager and gnome-vfs-daemon need to be started early.

- How can the dependencies be started before everything else?

Ways I am trying to answer them:

- Read through the raw strace logs.

  This is pretty helpful, but it is a lot of data. I have to study exactly what the nautilus threads were doing when they yielded the CPU. It would be really nice if we could record when a process/thread is switched off of the CPU.

- Extend Federico's visualization tool to show the interesting information.

  I've currently extended it to show futex information, but it generates an enormous image, and it is very hard to see what is going on. However, I think it would be useful to somehow show the parent/child relationship for some of the processes. This would help determine the dependencies. Maybe this should be a different tool, though.

- The NPTL trace tool.

  I just stumbled upon this, and I'm hopeful that this will give me some idea about how the various gnome threads interact.
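
As a starting point for the parent/child idea, the relationships can be recovered from the clone() lines that the strace log already contains. This is a rough sketch rather than an extension of Federico's tool; the function names are made up, and it assumes "strace -f -ttt -o" output where a successful clone ends in "= <new pid>", either on the original line or on a "<... clone resumed>" line.

```python
import re
from collections import defaultdict

# Matches a finished clone call and captures (caller pid, new pid/tid).
CLONE_DONE = re.compile(
    r'^(\d+)\s+\d+\.\d+\s+(?:clone\(|<\.\.\. clone resumed>).*=\s+(\d+)\s*$')


def process_tree(lines):
    """Map each pid to the list of pids/tids it created, in log order."""
    children = defaultdict(list)
    for line in lines:
        match = CLONE_DONE.match(line)
        if match:
            children[int(match.group(1))].append(int(match.group(2)))
    return dict(children)


def format_tree(children, root, depth=0):
    """Return indented text lines for the subtree rooted at `root`."""
    out = ["  " * depth + str(root)]
    for child in children.get(root, []):
        out.extend(format_tree(children, child, depth + 1))
    return out


if __name__ == "__main__":
    import sys
    # Usage: python clone_tree.py <root pid> < /tmp/gnome.log
    tree = process_tree(sys.stdin)
    print("\n".join(format_tree(tree, int(sys.argv[1]))))
```

This only says who created whom. It does not say who is waiting on whom, which is the harder half of the dependency question.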

Sunday, July 09, 2006

Cookbook

Reproducing

Alright, a few posts back, people asked me how to reproduce the work that I've been doing.

First, I've been relying heavily on Federico's code to do the tracing/visualization. This requires that gnome is run within strace.

0) Boot your machine to init level 3.

This will allow us to run startx, run through the gnome initialization cycle, and then end without adding stuff to gdm.

1) Sprinkle the gnome code with calls to program_log.

First, I've created a header that I can include with the following code:

gnome-profile.h

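    #include <stdarg.h>    /* va_list, va_start, va_end */
    #include <stdlib.h>    /* getenv */
    #include <unistd.h>    /* access, F_OK */
    #include <sys/time.h>  /* gettimeofday */
    #include <glib.h>      /* g_strdup_vprintf, g_strdup_printf, g_get_prgname, g_free */

    /* Emit a marker that shows up in the strace log as an access() call
     * on a nonexistent path of the form "MARK: <program>: <message>".
     * Does nothing unless GNOME_PROFILING is set in the environment. */
    static void program_log (const char *format, ...)
    {
        va_list args;
        char *formatted, *str;
        struct timeval current_time;

        if (getenv("GNOME_PROFILING"))
        {
            va_start (args, format);
            formatted = g_strdup_vprintf (format, args);
            va_end (args);

            /* The value is not used yet; strace -ttt supplies the timestamps. */
            gettimeofday(&current_time, NULL);
            str = g_strdup_printf ("MARK: %s: %s", g_get_prgname(), formatted);
            g_free (formatted);

            access (str, F_OK);  /* the syscall itself is the log entry */
            g_free (str);
        }
    }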

In nautilus-main.c in the first non-declaration line of 'main()' I have added:

program_log("%s Starting_Nautilus",__FUNCTION__);

-and-

In nautilus-icon-container.c in the first non-declaration line of 'expose_event()', I have added:

program_log("icon_container expose_event");

This is enough to time the nautilus startup.

NOTE: This implementation is pretty hacky right now. This should probably be pushed into a common library (glib?) somewhere, but right now it is handy because I don't have to rely on every application that I want to profile including a particular library. I just add the "#include" and drop in some calls to "program_log" and I am off to the races.
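
Once the session runs under strace, each program_log call appears in the log as an access() on a path that begins with "MARK:". A small sketch of pulling those lines back out follows. The function names `parse_marks` and `elapsed` are made up for illustration, and the line shape assumes "strace -f -ttt -o" output (pid, epoch timestamp, then the call).

```python
import re

# Assumed line shape:
#   <pid> <epoch.usec> access("MARK: <program>: <message>", F_OK) = -1 ENOENT ...
MARK_RE = re.compile(
    r'^(\d+)\s+(\d+\.\d+)\s+access\("MARK: ([^:]+): (.*)", F_OK\)')


def parse_marks(lines):
    """Return (timestamp, program, message) for every MARK line."""
    marks = []
    for line in lines:
        match = MARK_RE.match(line)
        if match:
            marks.append((float(match.group(2)),
                          match.group(3),
                          match.group(4)))
    return marks


def elapsed(marks, start_text, end_text):
    """Seconds between the first mark containing start_text and the
    first mark containing end_text."""
    start = next(t for t, _, msg in marks if start_text in msg)
    end = next(t for t, _, msg in marks if end_text in msg)
    return end - start


if __name__ == "__main__":
    with open("/tmp/gnome.log") as log:
        marks = parse_marks(log)
    print(elapsed(marks, "Starting_Nautilus", "icon_container expose_event"))
```

With only the two marks described above, `elapsed` gives the nautilus startup time; adding more program_log calls gives finer-grained intervals without changing the parser.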

2) Add stracing to xinitrc.

When you launch gnome, you have to make sure strace is running, so I've added calls to strace to my xinit file (for reasons described in previous posts).

Add something similar to the following to your ".xinitrc":

    exec strace -e clone,execve,open,access -ttt -f -o /tmp/gnome.log /home/gnome/bin/jhbuild run gnome-session

3) Start GNOME with profiling turned on.

Now you can launch gnome with tracing turned on:

    env GNOME_PROFILING=1 startx

After this command has completed, an strace of the session will be sitting in /tmp.

4) Add a command to the session startup which will automatically tear down the session.

I've added the following shell script to my gnome-session startup. (You may have to adjust the initial sleep if things take longer than 5 seconds to start up.)

tear_down.sh:

    #!/bin/bash
    sleep 5                     # give the session time to finish starting
    gnome-session-save --kill   # ask gnome-session to end the session
    sleep 5
    killall X                   # make sure X exits so that startx returns

5) Turn off the "Is it OK to log out?" prompt.

Run "gnome-session-properties" and disable "ask on logout".

Now, you should be able to run startx, have gnome start, and then exit back to the initial prompt.

6) Script the automatic timing and analysis of the startup/teardown.

First, I created a script to automatically determine the time to start the session and time to start nautilus and output that in a file called "summary":

(The script is called logs.py)

    #!/usr/bin/python
    # Reads an strace log on stdin and prints the time from session start
    # and from nautilus start to the first icon_container expose_event.
    import sys

    found_event = 0
    for line in sys.stdin:
        # With "strace -f -ttt", field 0 is the pid and field 1 is the timestamp.
        if line.find("Starting_Nautilus") != -1:
            start_time = float(line.split()[1])
        if line.find('execve("/home/gnome/bin/jhbuild') != -1:
            session_start_time = float(line.split()[1])
        if (line.find("icon_container expose_event") != -1) and found_event == 0:
            expose_time = float(line.split()[1])
            found_event = 1

    print("Start->Icon_Expose (ses): %s (Naut): %s" % (expose_time - session_start_time, expose_time - start_time))

...

Next, I created a script to run this AND Federico's graphical analysis tool. It will create a directory with the time of the run, a copy of the log, and the picture of the execution.

I call it "test2.sh":

    #!/bin/bash
    while /bin/true
    do
        DATE=`date +%Y%m%d%H%M%S`      # one directory per run, named by start time
        mkdir $DATE
        env GNOME_PROFILING=1 startx   # returns once tear_down.sh has killed X
        cp /tmp/gnome.log $DATE/
        ~/plot-timeline.py $DATE/gnome.log -o $DATE/output-$DATE.png
        rm -f /tmp/*.log
        cat $DATE/gnome.log | ./logs.py | tee $DATE/summary
        sleep 3
    done

7) Run for infinity.

Now, you can run this for a long time, and just let gnome startup/teardown. After 40 or so runs, I stop the loop, and see what happened.

8) Analyze the results

Next I gather all of the results into a single file with:

    grep Nau */summary > results.csv

I can then load this file into gnumeric and graph the results.

9) (Extra) Prune the startup of the session.

Currently, I am just starting nautilus (no WM, and no gnome-panel).

I have a ~/.gnome2/session file that looks as follows:

    [Default]
    num_clients=3
    1,id=default1
    1,Priority=10
    1,RestartCommand=nautilus --no-default-window --sm-client-id default1

...

That's all for right now. I found something interesting, but I'll save that for the next post.

ps. HI WIFEZILLA!

Thursday, June 29, 2006

Bet ya bite a chip

I wanted to get something out earlier, but I've had a bit of a nasty fever for the past few days. I promised in one of the blog comments to release the scripts that I am using for testing. I will do this very soon (probably in the next blog entry). I have some cleanup that I need to do.

fwrite->strace

In one of my past entries I mentioned that I was having trouble strace'ing startx, and that is what prevented me from using Federico's original patches. Well, that was beginning to bite me. First, my fwrite method did not handle threading very well. A message could get split if a log was sent simultaneously by two different threads. (I think I could fix that though.) Second, and more important, Federico's excellent visualization script wouldn't work.

I determined that figuring out how to get strace to work was really the only way to go.

First, it appears that the "SUID" problem was with strace and NOT with SELinux as I originally thought. According to the strace man page it is possible to create an suid strace binary, and that will allow suid binaries to work properly. I created an suid-root strace, and that allowed me to "strace X". However, when I tried to launch gnome with this binary it gave me errors (something about "use a helper application for SUID stuff").

I had to take a different approach, so I changed my .xinitrc to launch an straced version of gnome rather than stracing startx directly. The suid-root X would start normally, and then the stracing would begin. That worked!

Here's my currently working .xinitrc:

.xinitrc

    #!/bin/bash
    exec strace -e clone,execve,open,access -ttt -f -o /tmp/gnome.log /home/gnome/bin/jhbuild run gnome-session

Notice that I added the "-e" option to Federico's default "-ttt -f" command line. This will ONLY record the clone, execve,open,access system calls. This speeds up the run-time, and reduces the amount of stuff that needs to be dumped in the log file.

All is not rosy

Unfortunately, now that I am using strace, the start-up times seem a bit more sluggish. However, I have to update my scripts to automatically figure out how long things are taking. (I've just been reading log times manually.)

Also, and more importantly, my session will no longer shut down by itself with strace turned on. I don't know if this is a bug in my distribution (with strace/ptrace) or an actual race within the gnome code. It makes it a real pain when I want to run 40 tests because I have to sit there and press Ctrl-Alt-Backspace. Even then things don't get properly cleaned up. I am going to work on a big hammer to fix this.

1000 words

Federico suggested that I rearrange the order of nautilus vs. gnome-panel in the gnome-session file, and see if that changes the variability that I have been seeing. I don't have a definitive answer on that yet. However, I did notice that nautilus's execution does become interlaced with the gnome-vfs-daemon and the gnome-panel. I wonder if it is possible to start the gnome-vfs-daemon before both of them, so nautilus won't have to wait for it to start up and/or compete with the panel for its resources. It would be really nice to know all of the dependencies of the gnome daemons. That way we could start the most needed ones earlier, and the non-conflicting ones in parallel.
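
If the dependencies were known, turning them into a start order would be the easy part. The sketch below uses Python's standard graphlib module (Python 3.9 or later) to group programs into "waves": everything in a wave can start in parallel once the previous wave is up. The dependency table in the usage section is purely hypothetical, since the real dependencies are exactly what is not known yet, and `startup_waves` is a made-up name.

```python
from graphlib import TopologicalSorter


def startup_waves(depends_on):
    """Group programs into waves that can be launched in parallel.

    depends_on maps a program name to the set of programs that must
    already be running before it starts.
    """
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for stable output
        waves.append(ready)
        sorter.done(*ready)
    return waves


if __name__ == "__main__":
    # HYPOTHETICAL dependencies, for illustration only.
    example = {
        "gnome-vfs-daemon": set(),
        "nautilus": {"gnome-vfs-daemon"},
        "gnome-panel": {"gnome-vfs-daemon"},
    }
    for number, wave in enumerate(startup_waves(example)):
        print(number, wave)
```

With that made-up table, gnome-vfs-daemon lands in the first wave and nautilus and gnome-panel share the second, which is the ordering wondered about above.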

In any event, here's a picture of my current startup. Note: most of the functions with a name of "(null)" are probably the gnome-vfs-daemon.

NOTE: The long pause at the end is because the shutdown of gnome has hung...

Note to ESD: DON'T EVER RUN

I noticed this before when I was shutting things down, but I saw it again on my logs, and it was really annoying me. I have esd completely disabled, yet on shutdown the esd process always starts.

I traced it down to "gnome-session-save". However, I don't yet know which part is calling it.

gnome-session-save calls this:

    execve("/bin/sh", ["/bin/sh", "-c", "/home/gnome/gnome2/bin/esd -term"...], [/* 35 vars */]) = 0

which in turn calls this:

    execve("/home/gnome/gnome2/bin/esd", ["/home/gnome/gnome2/bin/esd", "-terminate", "-nobeeps", "-as", "2", "-spawnfd", "15"], [/* 35 vars */]) = 0

This is the pebble in my shoe.

Cookbook

As I mentioned above, I don't yet have a cookbook that other people can follow to reproduce things. I will do that in the next blog entry. I need to do a little bit of clean-up, but then I can provide a patch.

Thursday, June 22, 2006

We're hunting wabbits

I've been trying to track down the variability that I mentioned in the last blog entry. It is beginning to feel like a threading/scheduling/race problem.

When gnome is starting up, everything is competing for the CPU. 'gnome-panel' and 'nautilus' (among other things) are both trying to initialize at the same time.

So, I've noticed two different things.

- The first call to g_signal_connect in 'nautilus_application_instance_init' is fast in the fast case, and slow in the slow case.

  In particular, this call: 'g_signal_connect_object (gnome_vfs_get_volume_monitor (), "volume_unmounted", G_CALLBACK (volume_unmounted_callback), application, 0);' takes a long time (1.3 sec) when things are going slowly, but is very quick (0.2 sec) when things are fast. This function appears to add one or more threads that do a bunch of work.

  I've instrumented various functions in the file containing 'gnome_vfs_get_volume_monitor', and I can see that they are running, but I am not sure why. (I have yet to trace the execution from the signal connect to these functions actually running.)

- In the fast case, gnome-panel runs the code AFTER nautilus starts up. In the slow case, the gnome-panel execution is interspersed with the nautilus execution.

In the slow case: nautilus needs to do some work, but somebody else (gnome-panel?) holds the lock, so nautilus sleeps a LONG time after it realizes this.

In the fast case: nautilus grabs the lock, and keeps going until it is ready to run. Another possibility: For the fast case, it also might be that the gnome-volume-monitor stuff has already been initialized before nautilus needs it. However, my instrumentation shows that in the fast case, nautilus is the FIRST thing to call the gnome-volume-monitor code, so that wouldn't explain things.